Creating engaging video ads has traditionally meant long timelines, high production costs, and endless coordination with models, crews, or agencies. For most content creators and brands, producing authentic UGC-style ads at scale is either too expensive or simply too slow to keep up with the demands of modern social platforms. That’s where the Nano Banana + n8n automation changes everything. By connecting Google’s Nano Banana (Gemini 2.5 Flash Image) API for image generation with Kling AI for video transformation and orchestrating it all through n8n, this system turns a single product photo into scroll-stopping short videos in minutes.

For teams focused on editorial content instead of ads, we’ve also built a workflow for automating Napkin visuals with n8n and Playwright, perfect for turning inline image prompts into Napkin-style graphics and publishing them directly to WordPress.

There are no models, no video crews, and no $10K agency retainers, just an always-on factory that generates consistent, brand-ready UGC-style content. Nano Banana delivers hyper-realistic visuals with strong character consistency, Kling AI animates them into professional video ads, and n8n handles quality control and automated delivery. A single conventionally produced UGC video often costs $500 or more. This pipeline generates as many variations as you need for cents each in API fees, giving creators 24/7 content production and complete creative control.

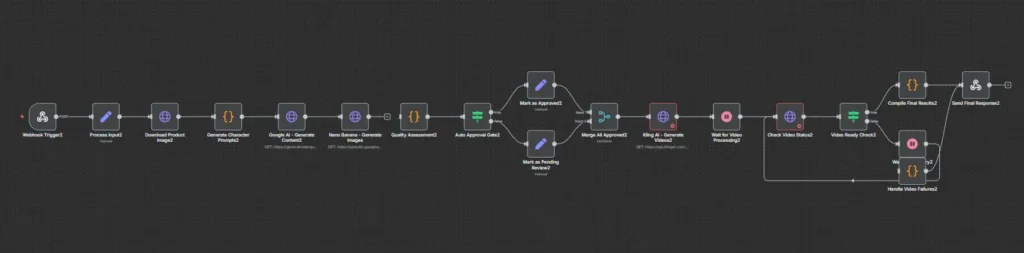

How does the n8n workflow actually work?

We used n8n as the orchestration engine. This allowed us to chain APIs deterministically:

- Webhook Trigger – receive product image + brand style.

- Gemini (Google AI Studio) – refine prompts for realism & brand tone.

- Nano Banana (Gemini 2.5 Flash Image) – generate consistent, photoreal images of the product in context.

- Quality Assessment + Auto Approval Gate – filter only high-scoring outputs.

- Kling AI – transform approved images into 15-second, 9:16 UGC-style videos.

- Normalization & Retry Loop – capture the Kling video ID, then poll for completion with a bounded number of retries.

- Respond to Webhook – return structured JSON with video URLs, thumbnails, and costs.

Where Vyrade Fits and Why It Matters

Vyrade’s mission is to help creators and teams discover, compare, and orchestrate AI workflows. This UGC Ad Factory use case shows exactly why that matters:

- No more tool fatigue: You don’t have to manually juggle APIs.

- Reusable workflow template: Import once, adapt for any brand.

- Open ecosystem: Swap Nano Banana for DALL·E, or Kling AI for Runway ML, without breaking the flow.

For content creators, this means one click → consistent, on-brand video ads.

How can I set this up technically?

Workflow JSON:

How to Import in n8n

- Go to your n8n dashboard → Workflows → Import from File

- Upload the JSON

- Add credentials:
  - `google-ai-creds` → Query Auth (`key=YOUR_GOOGLE_AI_KEY`)
  - `kling-ai-creds` → Header Auth (`Authorization: Bearer YOUR_KLING_KEY`)
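The two credentials attach the key in different places: Query Auth appends it to the request URL, while Header Auth sends it as a bearer token. The Python sketch below only builds the requests (nothing is sent) to make that difference concrete. The Gemini model path is an assumption to check against Google's current model list, and the Kling URL is a placeholder for whichever endpoint your account uses.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Assumed Gemini model path; verify the current model ID before use.
GEMINI_URL = ("https://generativelanguage.googleapis.com/v1beta/models/"
              "gemini-2.5-flash-image:generateContent")
# Placeholder only: replace with the Kling endpoint your account uses.
KLING_URL = "https://kling.example.com/v1/videos/image2video"


def with_query_auth(url: str, api_key: str) -> str:
    """Query Auth (google-ai-creds): the key travels as ?key=..."""
    return f"{url}?{urlencode({'key': api_key})}"


def with_header_auth(url: str, token: str, body: bytes) -> Request:
    """Header Auth (kling-ai-creds): the key travels as a Bearer token."""
    return Request(
        url,
        data=body,
        headers={"Authorization": f"Bearer {token}",
                 "Content-Type": "application/json"},
        method="POST",
    )


gemini_url = with_query_auth(GEMINI_URL, "YOUR_GOOGLE_AI_KEY")
kling_req = with_header_auth(KLING_URL, "YOUR_KLING_KEY", b"{}")
```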

What are the key stages of the workflow?

1. Webhook Trigger

Receives a request with the product details (like name, image URL, and style) to kick off the workflow.
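A minimal sketch of calling the workflow from Python. The webhook URL is hypothetical (n8n shows the real production URL in the Webhook node), and so is the `product_name` field; the other fields follow the Configuration Matrix. The request is built but not sent.

```python
import json
from urllib.request import Request

# Hypothetical production URL of the n8n Webhook node.
WEBHOOK_URL = "https://your-n8n-host/webhook/ugc-ad-factory"

payload = {
    "product_name": "Vitamin C Serum",  # hypothetical field name
    "product_image_url": "https://example.com/serum.png",
    "variations": 2,
    "brand_guidelines": {"style": "modern"},
}

request = Request(
    WEBHOOK_URL,
    data=json.dumps(payload).encode("utf-8"),
    headers={"Content-Type": "application/json"},
    method="POST",
)
# urllib.request.urlopen(request) would send it; omitted so the sketch runs offline.
```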

2. Process Input

Cleans up the incoming data, applies defaults (for example, number of variations or style), and validates that everything is in the right format.
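The Process Input logic might look like the sketch below. The defaults (one variation, `modern` style), the hypothetical `product_name` field, and the choice to clamp `variations` into the 1–3 range rather than reject it are assumptions, not the workflow's published behavior.

```python
from urllib.parse import urlparse

# Hypothetical defaults; the workflow's actual defaults may differ.
DEFAULTS = {"variations": 1, "style": "modern"}
MAX_VARIATIONS = 3  # matches the 1–3 range in the configuration matrix


def process_input(body: dict) -> dict:
    """Normalize a webhook body and fail fast on bad input."""
    url = str(body.get("product_image_url", "")).strip()
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError("product_image_url must be an absolute http(s) URL")

    variations = int(body.get("variations", DEFAULTS["variations"]))
    variations = max(1, min(variations, MAX_VARIATIONS))  # clamp to 1..3

    guidelines = body.get("brand_guidelines") or {}
    style = guidelines.get("style") or DEFAULTS["style"]

    return {
        "product_name": str(body.get("product_name", "Product")).strip(),
        "product_image_url": url,
        "variations": variations,
        "style": style,
    }
```

Clamping keeps a slightly malformed request running; rejecting it would surface caller mistakes sooner. Either is reasonable depending on who calls the webhook.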

3. Download Product Image

Fetches the product image file from the provided URL so it can be passed through later steps.

4. Generate Character Prompts

Creates descriptive prompts for different character variations (e.g., age, gender, setting) to guide image generation.
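One way to draft per-variation prompts, sketched under assumptions: the character pool and the prompt wording are hypothetical. Each prompt carries its `variation` index so later steps can re-attach it.

```python
# Hypothetical character pool; the real workflow's traits are not published here.
CHARACTERS = [
    {"age": "mid-20s", "gender": "woman", "setting": "sunlit bathroom"},
    {"age": "early 30s", "gender": "man", "setting": "home office desk"},
    {"age": "late 40s", "gender": "woman", "setting": "kitchen counter"},
]


def character_prompts(product_name: str, style: str, variations: int) -> list[dict]:
    """Return one draft prompt per variation, tagged with its index."""
    prompts = []
    for i in range(variations):
        c = CHARACTERS[i % len(CHARACTERS)]  # cycle if variations exceed the pool
        text = (f"A {c['age']} {c['gender']} holding {product_name} in a {c['setting']}, "
                f"handheld smartphone photo, natural light, {style} aesthetic")
        prompts.append({"variation": i + 1, "character": c, "prompt": text})
    return prompts
```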

5. Google AI Studio (Gemini)

Refines those prompts into polished, brand-safe instructions that image generators can use effectively.
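The refinement step is a text-only call to the Gemini `generateContent` endpoint. The sketch below builds the request body and reads the reply; the instruction text is hypothetical, and using `gemini-2.5-flash` as the text model is an assumption.

```python
# Hypothetical refinement instruction; tune the wording to your brand guidelines.
REFINE_INSTRUCTION = (
    "Rewrite the user's image prompt for a photorealistic UGC-style product photo. "
    "Keep the product unchanged and avoid text overlays, other brands' logos, and "
    "unsafe content. Return only the rewritten prompt."
)


def refine_request_body(draft_prompt: str, style: str) -> dict:
    """Body for a Gemini generateContent call (text in, text out)."""
    return {
        "system_instruction": {"parts": [{"text": REFINE_INSTRUCTION}]},
        "contents": [{
            "role": "user",
            "parts": [{"text": f"Brand style: {style}\nDraft prompt: {draft_prompt}"}],
        }],
    }


def refined_text(response: dict) -> str:
    """Pull the rewritten prompt out of a generateContent response."""
    parts = response["candidates"][0]["content"]["parts"]
    return "".join(p.get("text", "") for p in parts).strip()
```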

6. Image Generation (Nano Banana – Gemini 2.5 Flash Image)

Takes the refined prompts and produces photorealistic images of the product being used in context, with unmatched character consistency.
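For the image step, the same `generateContent` call goes to the image model (assumed here to be `gemini-2.5-flash-image`; during preview it was published as `gemini-2.5-flash-image-preview`), with the product photo attached as inline base64 data. Generated images come back as base64 parts in the response. A sketch under those assumptions, also assuming PNG input:

```python
import base64


def image_request_body(prompt: str, product_png: bytes) -> dict:
    """generateContent body: refined prompt plus the product photo as reference."""
    return {
        "contents": [{
            "parts": [
                {"text": prompt},
                {"inline_data": {
                    "mime_type": "image/png",  # assumes the product photo is PNG
                    "data": base64.b64encode(product_png).decode("ascii"),
                }},
            ],
        }],
    }


def extract_images(response: dict) -> list[bytes]:
    """Decode every image part in the response (REST replies use camelCase keys)."""
    images = []
    for candidate in response.get("candidates", []):
        for part in candidate.get("content", {}).get("parts", []):
            blob = part.get("inlineData") or part.get("inline_data")
            if blob and blob.get("data"):
                images.append(base64.b64decode(blob["data"]))
    return images
```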

7. Quality Assessment

Reviews the generated images, attaches the original metadata (like which variation they belong to), and assigns a quality score.
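The article doesn't say how the quality score is computed. As a stand-in, the sketch below scores images by resolution only (a hypothetical heuristic; a production version might ask a vision model to rate realism and brand fit). More importantly, it copies the original `variation` and `character` fields forward so they survive the HTTP nodes. It assumes PNG output.

```python
import struct

MIN_SIDE = 1024  # mirrors the ≥1024×1024 guidance in the configuration matrix


def png_size(data: bytes) -> tuple[int, int]:
    """Read width and height from a PNG IHDR chunk (assumes PNG output)."""
    if data[:8] != b"\x89PNG\r\n\x1a\n" or data[12:16] != b"IHDR":
        raise ValueError("not a PNG")
    return struct.unpack(">II", data[16:24])


def assess(image: bytes, source: dict) -> dict:
    """Score one image and carry the original metadata forward.

    Hypothetical heuristic: score = shortest side / MIN_SIDE, capped at 1.0.
    """
    width, height = png_size(image)
    score = round(min(1.0, min(width, height) / MIN_SIDE), 2)
    return {
        "variation": source["variation"],  # re-attached: HTTP nodes drop these
        "character": source["character"],
        "width": width,
        "height": height,
        "quality_score": score,
    }
```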

8. Auto Approval Gate

Automatically passes high-quality images into the next stage, while setting lower-scoring ones aside for review if needed.
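The gate itself is a threshold split, which in n8n would typically be an IF node. A sketch with a hypothetical 0.8 threshold:

```python
APPROVAL_THRESHOLD = 0.8  # hypothetical cut-off; tune it against manual reviews


def approval_gate(items: list[dict], threshold: float = APPROVAL_THRESHOLD):
    """Split assessed images into (approved, needs_review) by quality_score."""
    approved = [i for i in items if i["quality_score"] >= threshold]
    needs_review = [i for i in items if i["quality_score"] < threshold]
    return approved, needs_review


approved, review = approval_gate([
    {"variation": 1, "quality_score": 0.92},
    {"variation": 2, "quality_score": 0.55},
])
```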

9. Kling AI Video Generation

Transforms approved images into short UGC-style videos (for example, 15 seconds, vertical 9:16 format).

10. Normalize Kling ID

Ensures the correct video ID is captured so the workflow can keep track of each video during processing.
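A defensive ID normalizer could look like this. Kling's image-to-video API is commonly documented as returning the ID at `data.task_id`; the fallback paths are assumptions covering wrappers or proxies that reshape the response.

```python
# First path follows Kling's documented shape as we understand it;
# the rest are defensive assumptions.
CANDIDATE_PATHS = [("data", "task_id"), ("task_id",), ("data", "id"), ("id",)]


def normalize_kling_id(response: dict) -> str:
    """Return the first non-empty task ID found, or raise if none is present."""
    for path in CANDIDATE_PATHS:
        node = response
        for key in path:
            node = node.get(key) if isinstance(node, dict) else None
        if isinstance(node, (str, int)) and str(node):
            return str(node)
    raise KeyError("no video/task ID in Kling response")
```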

11. Wait and Check Video Status

Polls Kling AI to see if the video is ready. Retries up to a set limit if it’s still processing.
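Bounded polling is a loop with a fixed attempt budget. In the sketch below, `fetch_status` is a hypothetical callable that queries the Kling task and returns its `data` object. The `succeed`/`failed` status strings follow Kling's documented task states as best we know, and the attempt count and interval are placeholders. Injecting `sleep` keeps the function testable.

```python
import time
from typing import Callable


def wait_for_video(
    fetch_status: Callable[[str], dict],
    task_id: str,
    max_attempts: int = 12,      # hypothetical bound
    wait_seconds: float = 15.0,  # hypothetical interval
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Poll until the task succeeds or fails, giving up after max_attempts."""
    for attempt in range(1, max_attempts + 1):
        status = fetch_status(task_id)
        state = status.get("task_status")
        if state == "succeed":
            return status
        if state == "failed":
            raise RuntimeError(f"Kling task {task_id} failed: {status}")
        if attempt < max_attempts:
            sleep(wait_seconds)  # no sleep after the final attempt
    raise TimeoutError(f"Kling task {task_id} not ready after {max_attempts} polls")
```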

12. Compile Final Results

Bundles all completed videos into one structured response, including URLs, thumbnails, quality scores, and cost estimates.
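Compiling the response is mostly reshaping plus a cost estimate. In this sketch the per-unit costs are inputs you supply, and field names such as `video_url` and `thumbnail_url` are hypothetical.

```python
def compile_results(
    videos: list[dict],
    images_generated: int,
    image_cost: float,
    video_cost: float,
) -> dict:
    """Bundle finished videos into one response with a rough cost estimate.

    image_cost and video_cost are per-unit USD estimates (hypothetical inputs);
    rejected images still count toward image spend.
    """
    items = [
        {
            "variation": v["variation"],
            "video_url": v["video_url"],
            "thumbnail_url": v.get("thumbnail_url"),  # may be absent
            "quality_score": v["quality_score"],
        }
        for v in videos
    ]
    cost = images_generated * image_cost + len(items) * video_cost
    return {
        "count": len(items),
        "videos": items,
        "estimated_cost_usd": round(cost, 2),
    }
```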

13. Respond to Webhook

Sends the finished results back to the caller in a clean, ready-to-use format.

Configuration Matrix

| Setting | Where | Example | Notes |
|---|---|---|---|
| `product_image_url` | Webhook body | https://…/serum.png | Prefer ≥ 1024×1024 px |
| `variations` | Webhook body | 1–3 | Controls character variety |
| `brand_guidelines.style` | Webhook body | modern | Drives aesthetics in prompts |
| Gemini key | Credential | Query Auth: `key` | Google AI Studio (Nano Banana) |
| Kling key | Credential | `Authorization: Bearer` | Required for video generation |

Optional Variants

- Swap Image Provider: DALL·E 3 (OpenAI) or Stable Diffusion (Replicate), keeping the same input and output fields so downstream nodes don't need changes.

- Manual Review: Send preview images to Slack/Email for one-click approve/deny.

- Deferred Mode: Respond immediately with a `job_id`, then post results to a callback URL when done.

- Persistent Characters: Save a traits/seed object to render the same on-screen character across campaigns.
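Deferred mode splits the run in two: an immediate reply carrying a `job_id`, and a later POST to the caller's callback URL. The sketch below shows both payloads; the `callback_url` field and the `queued`/`done` status values are hypothetical. In n8n this would roughly map to a Respond to Webhook node early in the flow and an HTTP Request node at the end.

```python
import json
import uuid
from urllib.request import Request


def accept_job(body: dict) -> tuple[dict, dict]:
    """Immediate webhook reply plus the job record to process in the background."""
    job_id = str(uuid.uuid4())
    job = {"job_id": job_id, "callback_url": body["callback_url"], "input": body}
    return {"job_id": job_id, "status": "queued"}, job


def callback_request(job: dict, results: dict) -> Request:
    """Build the POST of finished results to the caller's callback URL (not sent)."""
    payload = {"job_id": job["job_id"], "status": "done", **results}
    return Request(
        job["callback_url"],
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```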

Costs & Runtime

- Images: $0.02–$0.08 per variation (Nano Banana dependent)

- Videos: $0.10–$0.25 per 15s 9:16 (Kling AI dependent)

- Runtime: 1–3 minutes end-to-end, depending on queue & retries
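As a rough worked example using the ranges above, assume one image per variation and one video per approved image (an assumption; rejected images still cost money). With $n$ images at $c_{\text{img}}$ each and $m$ videos at $c_{\text{vid}}$ each, a run with $n = m = 3$ costs:

```latex
\begin{aligned}
C &= n\,c_{\text{img}} + m\,c_{\text{vid}} \\
C_{\min} &= 3(0.02) + 3(0.10) = 0.36 \\
C_{\max} &= 3(0.08) + 3(0.25) = 0.99
\end{aligned}
```

That works out to roughly $0.12–$0.33 per finished video under these assumptions.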

Troubleshooting

- Poll never completes: Increase max attempts slightly or add a longer wait; verify `video_id` normalization.

- Lost metadata (variation/character): Ensure the QC node re-attaches original fields after HTTP nodes.

- Blurry / off-brand images: Tighten lighting/composition directives; set a minimum resolution.

- Provider outages: Add a fallback image-gen provider and branch on HTTP status.

- Webhook timeout: Move to deferred mode for scale.
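A provider fallback can branch on the HTTP status: move on to the next provider for rate limits or server errors, and stop on other failures, which more often point to a problem with the request or credentials. The retryable status set and the provider callables are hypothetical; each callable stands in for an HTTP node returning `(status, body)`.

```python
from typing import Callable

# Status codes treated as "provider unavailable"; a hypothetical policy.
RETRYABLE = {429, 500, 502, 503, 504}


def generate_with_fallback(
    providers: list[tuple[str, Callable[[str], tuple[int, bytes]]]],
    prompt: str,
) -> tuple[str, bytes]:
    """Try each (name, call) in order; move on only when the status is retryable."""
    last_status = None
    for name, call in providers:
        status, body = call(prompt)
        if status == 200:
            return name, body
        if status not in RETRYABLE:
            raise RuntimeError(f"{name} rejected the request with HTTP {status}")
        last_status = status
    raise RuntimeError(f"all providers unavailable (last HTTP {last_status})")
```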

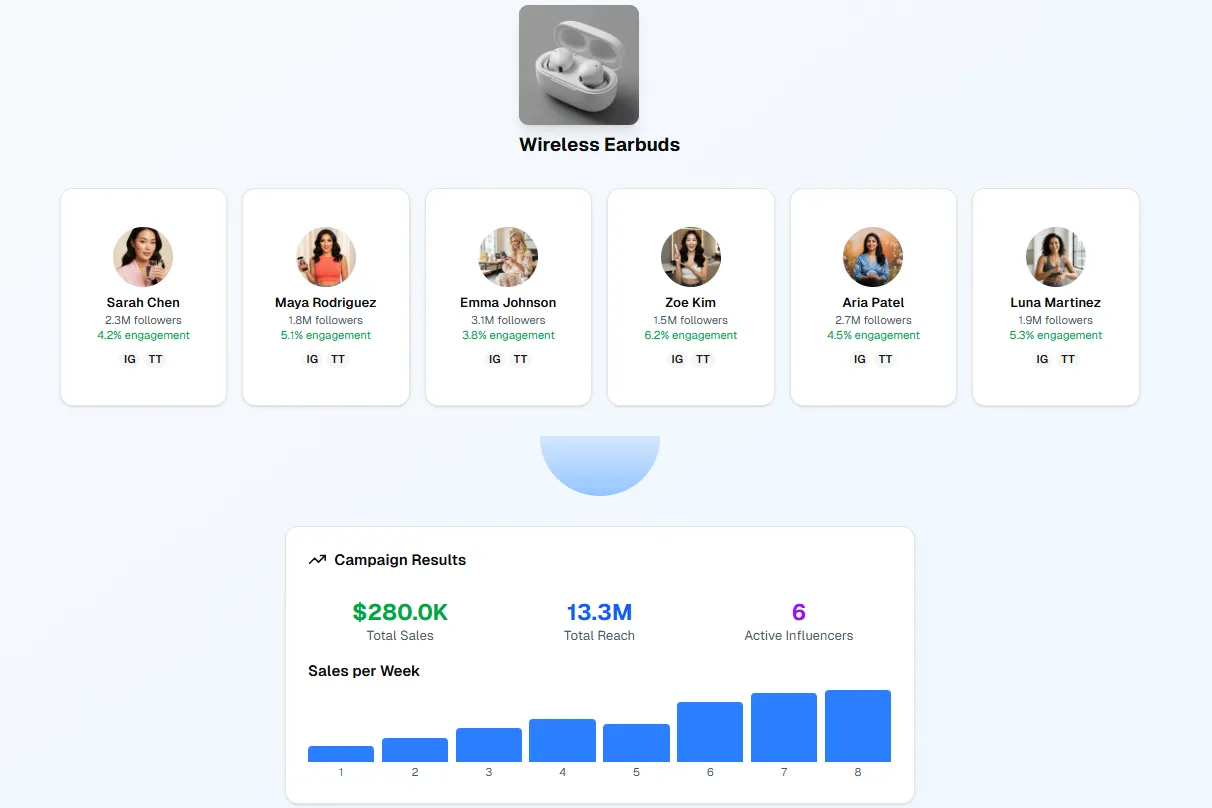

What results can content creators expect?

- Speed: From hours/days → minutes.

- Cost: From $500+ per UGC video → pennies.

- Consistency: Same product/character across all assets.

- Scalability: Run for hundreds of products concurrently on cloud-hosted n8n.

This isn’t a one-off demo. It’s a repeatable UGC Ad Factory content creators can trust to deliver at campaign scale.

FAQ

With this n8n workflow, you only need a single product image URL. The pipeline automatically generates photorealistic models (via Nano Banana) and then turns them into dynamic short videos using Kling AI.

n8n doesn’t generate videos by itself, but it orchestrates the process. By chaining APIs like Nano Banana (image generation) and Kling AI (video generation), n8n becomes the central automation hub that delivers ready-to-publish product videos.

Send your product image URL to the webhook → the workflow creates realistic variations with consistent characters → sends them to Kling AI → outputs a 15-second 9:16 product video with thumbnails and metadata.

This workflow combines AI image generators with AI video models. The product image is reimagined in authentic, UGC-style scenes and Kling AI animates it into an engaging video ad, all automated via n8n.